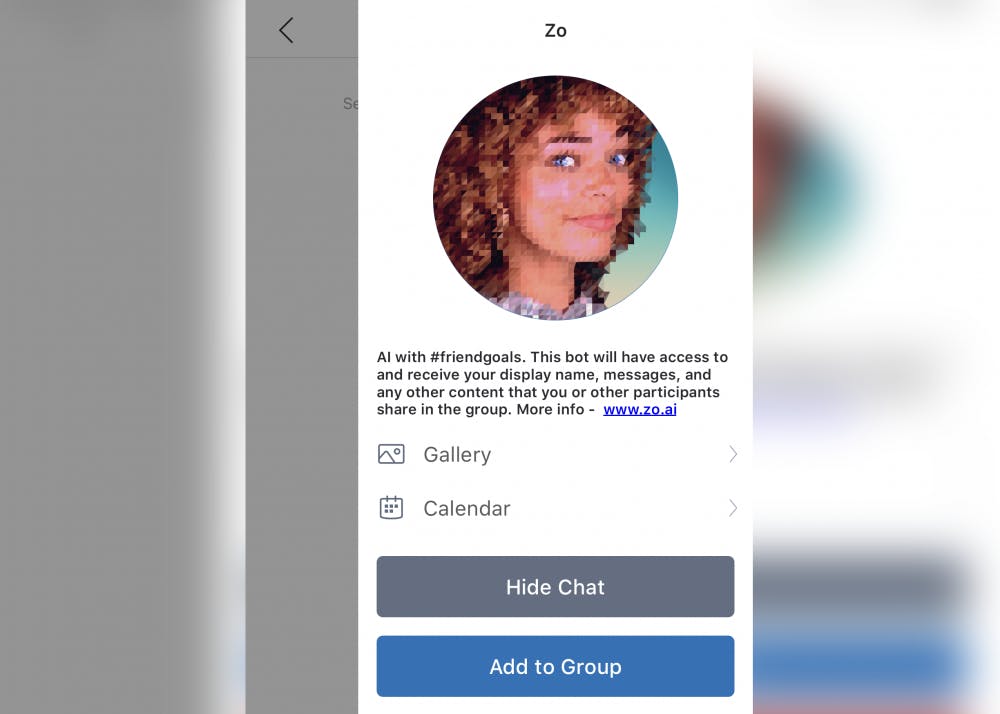

Rumors are circulating around IU that an artificial intelligence chat bot named Zo infects phones with viruses through GroupMe.

Microsoft has debunked the rumor, clarifying that Zo is only a chat bot.

“Zo is not related to a virus and can only access conversations that she’s invited to,” a Microsoft spokesperson said in an email. “To check what she knows, you can send Zo the message ‘Information you know about me Zo.’ You can always clear this data by messaging ‘Clear me from your memory Zo.’”

Some students are concerned about their privacy after hearing conflicting claims from friends and peers about what Zo can do.

Zo processes the messages, photos and voice files users send her in order to generate a response. Users can also allow Microsoft to save their voice and image files for product improvement, but the files are saved only if users consent.

“Someone in one of my GroupMes for one of the organizations that I’m involved in sent a message warning us to check our GroupMes about this bot,” freshman Sarah Kurpius said. “It scared me because I was worried about an invasion of my privacy.”

Microsoft released Zo, a social artificial intelligence chat bot, in 2016, but some students are only now becoming aware of it.

Zo is a social AI from Microsoft built to maintain conversations with users, the Microsoft spokesperson said in an email.

Zo can chat in one-on-one conversations or when added to groups in GroupMe.

“Zo is designed for entertainment purposes only, and nothing Zo says should be taken as advice or endorsement,” the GroupMe support page reads.

The bot offers users a way to have fun chatting with an AI.

“It doesn’t sound like something I’d use, but it’s sort of cool technology,” freshman John Adolay said.

Microsoft’s five AIs operate in five countries, each under a different name, such as Xiaoice (小冰) in China and Ruuh in India.